We've built commerce backwards for decades.

A customer lands on a product page. They see a single hero image—maybe a few angles if they're lucky. The image was shot months ago, approved by a committee, and frozen in time. It doesn't know who's looking at it. It doesn't adapt. It just sits there, hoping to convert.

This is the visual equivalent of showing everyone the same billboard and wondering why conversion rates plateau at 2-3%.

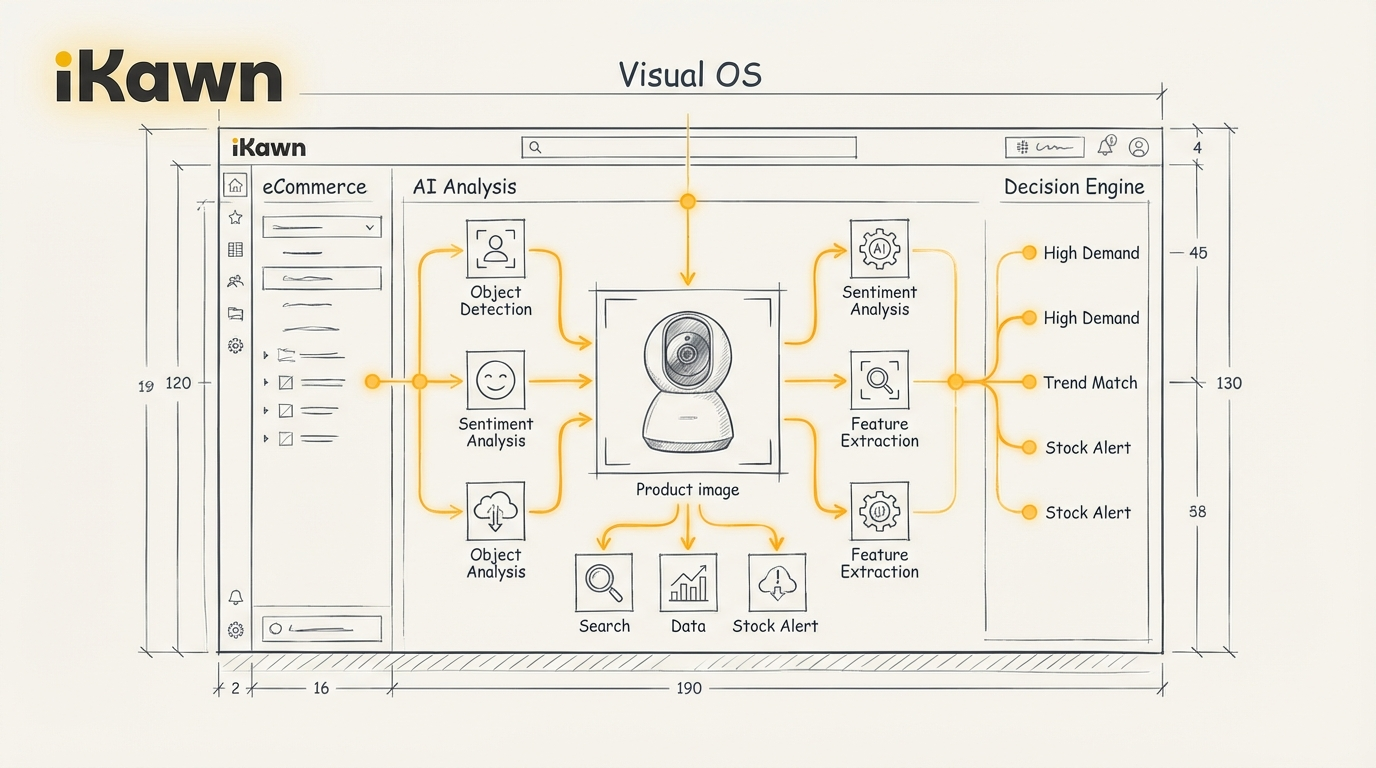

Visual OS flips this entirely. Instead of static assets, every visual becomes a living decision point that understands context, adapts to the viewer, and optimizes for outcomes in real time.

The Problem: Visual Content as a Bottleneck

Most businesses treat visuals as a creative problem. They hire photographers, brief agencies, run photoshoots, edit assets, resize for different channels, and upload to DAMs. By the time an image goes live, it's already outdated—market trends shifted, inventory changed, or a competitor launched something better.

The production cycle alone creates lag. A fashion brand might shoot spring collections in December. A furniture company photographs products that won't ship for months. A B2B SaaS platform uses the same hero image for a year because commissioning new creative is expensive and slow.

But the deeper issue isn't production—it's that static visuals can't respond to the single most important variable in commerce: who's looking.

A 25-year-old sneakerhead and a 55-year-old marathon runner land on the same running shoe page. They see identical images. Same angles. Same styling. Same context. The brand has no way to show the sneakerhead the streetwear appeal and the runner the technical specs—at least not without manually creating dozens of variants and hoping their segmentation logic works.

Visual OS solves this by making images intelligent.

How Visual OS Works: Adaptive Visual Intelligence

At its core, Visual OS treats every image as a canvas that can be generated, modified, or contextualized in real time, based on who's viewing it, what they've done before, and what outcome you're optimizing for.

Here's what that means in practice:

1. Contextual Generation

Instead of pre-creating every possible image variant, Visual OS generates visuals on demand. A luxury watch brand doesn't need 50 lifestyle shots. It needs one product and a system that can place it in a boardroom for executives, on a yacht for aspirational buyers, or on a wrist during a marathon for athletes.

The AI doesn't just swap backgrounds. It adjusts lighting, shadows, reflections, and composition to make each scene feel authentic. The executive sees the watch in natural office light. The athlete sees it in motion blur with sweat detail. Same product. Different context. Zero photoshoot.

2. Real-Time Personalization

Visual OS tracks micro-signals (scroll depth, hover time, previous browsing behavior, device type, time of day) and adjusts visuals accordingly.

A customer who lingered on sustainability content sees products in eco-friendly contexts. Someone browsing late at night on mobile gets bold, high-contrast visuals optimized for tired eyes and small screens. A repeat visitor skips the generic hero shot and sees the new colorway they haven't explored yet.

This isn't conventional A/B testing, where one winning variant is shown to everyone. It's adaptive rendering. Every session gets a unique visual experience optimized for conversion likelihood.
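To make the idea concrete, here is a minimal sketch of how session signals might map to rendering choices. It is a hand-written rule set standing in for whatever learned model a production system would use, and every name in it (`SessionSignals`, `choose_render_params`, the `scene` and `contrast` values, the late-night cutoff hours) is a hypothetical chosen for illustration, not part of Visual OS.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SessionSignals:
    """Hypothetical per-session signals; field names are illustrative."""
    device: str                  # "mobile" or "desktop"
    local_hour: int              # 0-23, viewer's local time
    viewed_topics: frozenset     # content themes the visitor lingered on
    seen_colorways: frozenset    # colorways this visitor has already viewed


def choose_render_params(signals, available_colorways):
    """Pick rendering parameters for one session.

    available_colorways must be a non-empty list; its first entry is
    treated as the default hero colorway.
    """
    params = {
        "scene": "studio",
        "contrast": "normal",
        "colorway": available_colorways[0],
    }

    # Visitors who lingered on sustainability content get an eco-themed scene.
    if "sustainability" in signals.viewed_topics:
        params["scene"] = "eco"

    # Late-night mobile sessions get high-contrast visuals.
    # The 22:00-05:00 window is an assumed cutoff.
    late_night = signals.local_hour >= 22 or signals.local_hour < 5
    if signals.device == "mobile" and late_night:
        params["contrast"] = "high"

    # Repeat visitors see the first colorway they have not explored yet.
    for colorway in available_colorways:
        if colorway not in signals.seen_colorways:
            params["colorway"] = colorway
            break

    return params


if __name__ == "__main__":
    night_owl = SessionSignals(
        device="mobile",
        local_hour=23,
        viewed_topics=frozenset({"sustainability"}),
        seen_colorways=frozenset({"black"}),
    )
    print(choose_render_params(night_owl, ["black", "olive"]))
```

In this sketch the output is just a parameter dictionary; the assumption is that a separate generation step would turn those parameters into the rendered image.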

3. Cross-Industry Application

While we're focused on ecommerce today, Visual OS has implications far beyond product pages.

Real estate platforms could show properties in different seasons, at different times of day, or with furniture staged to match the viewer's aesthetic preferences. Healthcare providers could visualize treatment outcomes or medical devices in contexts relevant to specific patient demographics. B2B software companies could show their dashboards populated with data from the viewer's industry.

The moment you treat visuals as dynamic rather than static, every industry with a visual component becomes addressable.

The Technical Foundation: Why This Works Now

Visual OS isn't just better creative tooling. It's a set of AI models that understand composition, context, and commerce outcomes at scale.

We've trained models on millions of product images, conversion data, and contextual signals. The system learns what works—not just aesthetically, but commercially. It knows that certain lighting drives luxury perception. That specific angles increase add-to-cart rates. That contextual backgrounds reduce bounce on mobile.

But more importantly, it learns continuously. Every interaction feeds back into the model. A/B tests run automatically across thousands of variants. Winners propagate. Losers get pruned. The system gets smarter with every session.
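One common way to implement this kind of "winners propagate, losers get pruned" loop is a multi-armed bandit. The sketch below uses Thompson sampling over Beta distributions as an assumed technique; the post does not say which algorithm Visual OS uses. The class name, the pruning thresholds, and the variant ids are all hypothetical.

```python
import random


class VariantBandit:
    """Thompson-sampling bandit over image variants (illustrative sketch)."""

    def __init__(self, variant_ids, seed=None):
        # Each variant starts with a Beta(1, 1) prior: [alpha, beta].
        self.stats = {v: [1, 1] for v in variant_ids}
        self.rng = random.Random(seed)

    def choose(self):
        """Sample a plausible conversion rate per variant; serve the highest."""
        draws = {
            v: self.rng.betavariate(alpha, beta)
            for v, (alpha, beta) in self.stats.items()
        }
        return max(draws, key=draws.get)

    def record(self, variant_id, converted):
        """Feed one session outcome back into the model."""
        if variant_id in self.stats:
            self.stats[variant_id][0 if converted else 1] += 1

    def prune(self, min_impressions=200, keep_ratio=0.5):
        """Drop variants that have enough data and convert at less than
        keep_ratio times the best observed rate. Thresholds are assumptions."""
        def impressions(ab):
            return ab[0] + ab[1] - 2  # subtract the prior pseudo-counts

        def rate(ab):
            return (ab[0] - 1) / max(1, impressions(ab))

        best = max(rate(ab) for ab in self.stats.values())
        losers = [
            v for v, ab in self.stats.items()
            if impressions(ab) >= min_impressions and rate(ab) < keep_ratio * best
        ]
        for v in losers:
            del self.stats[v]
        return losers


if __name__ == "__main__":
    bandit = VariantBandit(["boardroom", "yacht"], seed=0)
    # Simulated history: 300 sessions each, with 30 and 3 conversions.
    for i in range(300):
        bandit.record("boardroom", converted=i < 30)
        bandit.record("yacht", converted=i < 3)
    print("pruned:", bandit.prune())
    print("serving:", bandit.choose())
```

Sampling from each variant's distribution, rather than always serving the current leader, keeps a small amount of traffic flowing to uncertain variants, so new candidates still get a chance to prove themselves before pruning.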

This is only possible now because:

- Generative AI reached production quality for commercial imagery

- Real-time inference costs dropped below the marginal revenue gain per session

- Edge compute enables sub-100ms rendering without server round trips

Five years ago, this would've been too slow and too expensive. Today, it's faster and cheaper than traditional creative workflows.
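The second condition above is a break-even check, and it can be written as simple arithmetic. The sketch below assumes the per-session gain is the extra conversion probability times order value times gross margin. The function name and all the example inputs other than the baseline conversion rate and lift range quoted in this post are illustrative assumptions, not measured figures.

```python
def per_session_net_gain(baseline_cr, relative_lift, avg_order_value,
                         gross_margin, inference_cost):
    """Expected extra margin per session minus the cost of generating the visual.

    baseline_cr      conversion rate without personalization (e.g. 0.025)
    relative_lift    relative conversion lift from personalization (e.g. 0.15)
    avg_order_value  average order value in currency units (assumed input)
    gross_margin     fraction of revenue kept as margin (assumed input)
    inference_cost   cost of real-time inference per session (assumed input)
    """
    extra_conversions = baseline_cr * relative_lift
    expected_gain = extra_conversions * avg_order_value * gross_margin
    return expected_gain - inference_cost


if __name__ == "__main__":
    # Illustrative numbers only: 2.5% baseline, 15% lift, 80.00 order value,
    # 40% margin, 0.01 inference cost per session.
    net = per_session_net_gain(0.025, 0.15, 80.0, 0.4, 0.01)
    print(f"net gain per session: {net:.2f}")
```

With these made-up inputs the expected gain is about 0.12 per session against 0.01 of inference cost, so personalization pays for itself. The model is worth running only while this difference stays positive.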

What This Unlocks: From Cost Center to Revenue Driver

Brands currently treat visual content as a cost center. You spend on photoshoots, retouching, and storage, then hope the assets perform.

Visual OS inverts this. Visuals become a revenue multiplier because they adapt to maximize conversion for every viewer.

Early customers see:

- 40-60% reduction in creative production costs (fewer photoshoots, no manual resizing)

- 15-25% lift in conversion rates (contextually relevant visuals outperform generic ones)

- 10x faster time-to-market (generate new visuals in minutes, not weeks)

But the real unlock isn't efficiency—it's possibility.

A small brand can now compete visually with billion-dollar competitors. A niche B2B company can personalize visuals for every vertical they serve. A marketplace can give every seller intelligent visual tools without requiring design expertise.

The Broader Vision: Visual Intelligence Everywhere

Visual OS is the foundation, but it's not the end state.

We're building toward a world where every visual surface—product pages, emails, ads, packaging, in-store displays—adapts intelligently to context and outcome.

Imagine walking into a retail store where digital signage recognizes your past purchases and shows complementary products. Or receiving an email where the hero image adjusts based on what you clicked in the last campaign. Or seeing an ad that reflects the specific problem you searched for an hour ago.

This isn't surveillance. It's relevance. And relevance drives value for both sides: customers see what matters to them, and businesses convert more efficiently.

Visual OS is step one. It proves the model works in ecommerce. But the same principles apply anywhere visuals drive decisions—real estate, healthcare, education, entertainment, B2B sales.

We're starting with commerce because it has the tightest feedback loops. Every image either drives a transaction or it doesn't. You know within minutes if something works.

But once the foundation is solid, the applications are nearly limitless.

What's Next

Visual OS is live. Customers are using it to generate and personalize product imagery at scale. We're seeing conversion lifts that prove the model works.

But this is just the visual layer. Next, we layer on personalization logic that adapts the entire experience—not just images, but copy, layout, offers, and flows. Then we add intelligence that predicts what each customer needs before they know it themselves.

Visual OS. Personalization OS. Intelligence OS.

Each builds on the last. Each unlocks new value. Together, they form something bigger—a commerce operating system that makes every interaction smarter.

We're just getting started.